Expenditure Data

In common with other governments around the world, the UK Coalition Government made a commitment to increasing government transparency (successive Prime Ministers committed to the project: first Gordon Brown, and subsequently David Cameron). A key initiative under the general umbrella of government transparency is open data: the release to the public of government data, unencumbered by restrictive licences or fees.

Bristol City Council has published its expenditure from late 2010 onwards, and Stephen Fortune, working with YoHa, has been unpicking the entities behind this publicly available data.

A FORENSICS OF THE DATASET

What you eventually see on the Bristol B-Open Data Portal website is the residue of several kinds of relations made possible by the creditors' invoice database, the Costing And Financial Management database and the creditors' standing information database. These databases are part of the apparatus that operates Bristol City Council. The excavation I undertook uncovered which departments administer these databases and which people wrote the code that filters them into a manageable five-column comma-separated dataset.

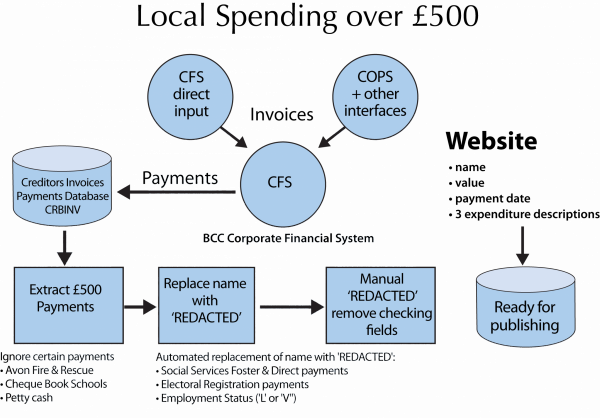

fig 1. Granular Open Spending

The investigation proceeded from the above diagram, an internal workflow detailing the steps by which the database is made amenable to open data. It is via this flow that one facet of the council database is polished for presentation to the public. The additional information conveyed by this workflow is genuinely useful: from it you can determine that Avon Fire & Rescue payments, petty cash and school cheque book payments have been excluded from the dataset. A 'civic hacker' perusing this expenditure dataset armed with this additional knowledge will be afforded a different perspective, one informed by knowing where the dataset doesn't represent the council's activities.

Though informative, this mapping didn't give much impression of the relational lineage of the database; opening one black box revealed several further ones inside. I decided that sketching my own mapping was the best path to pursue. An easy way to illustrate these relations is to take one mundane record and see which other council (administrative) appendages it connects to. The following map illustrates which parent databases the data is drawn from and who is responsible for the hierarchy and encoding systems within those databases.

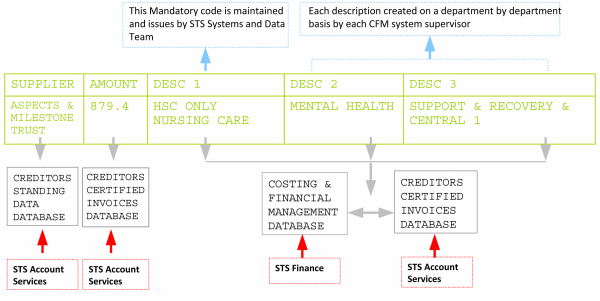

fig 2. sketch of council data origins

At the top of the diagram is a typical record from Bristol City Council's expenditure dataset; beneath it are the coded origins of this record. This is an excavation of the relations within a database, relations which may be occluded by the flat spreadsheet typical of an open data resource.

Responsible departments are indicated in red boxes, while those responsible for the encoding of expenditure categories are demarcated in dark blue. This mapping is not concerned with the data contained in each row of the dataset, but rather with who is responsible for the composition of each database and the fields designated therein. The annotation in light blue indicates how the encoding decided by a given department permits certain entries to be excluded from the final expenditure dataset published on the Bristol website (for more, see Decoding Open Data, below).

This is less an entity-relationship diagram and more a bespoke map for understanding the various departments and databases which are part of the larger machine of council expenditure, a machine whose full presence is not palpable within the expenditure datasets made available as open data. It also signposts the codes which allow the programmers to filter data. As such, none of what it illuminates is present in the final .csv.

How well this dataset fares in light of open data and transparency can be evaluated by reference to other datasets forming part of the B-Open Data portal. When YoHa first began working on this project they were encouraged to select from the open data featured on Bristol's B-Open website. Alongside the council expenditure data stood Air Quality, Water Quality and Meteorology datasets. Those three datasets formed the basis of Mobile Pie's Blossom Bristol app, a great instance of a charming interface with citizen science at the core of its game dynamics.

One of the premises of citizen science is that if members of the population have easy access to the tools to empirically test assumptions about their environment, they will become more rigorous participants in their immediate reality. Citizen science (unsurprisingly) leverages the ethics of the scientific method: the methods of collation and determination are rigorous and repeatable, and hence the conclusions reached can be interrogated. As a result you can test the prescriptions handed down to you using objective tools, rather than taking authority (whichever authority it happens to be) at its word.

The contrast I'm evoking is something of an apples-and-oranges comparison, by dint of the nature of the datasets collected (one expressly for scientific [meteorological] research, the other for the purposes of governmental administration and optimisation). Nevertheless, in the author's opinion, the juxtaposition raises an important question. Can administrative government evoke the citizen science ethos with open data? Is that possible when the residue of the council's activity is all that is presented to you via this spreadsheet?

DECODING OPEN DATA

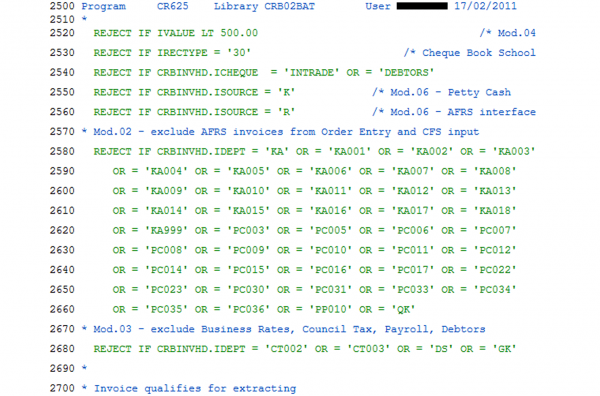

fig 3. legacy of modifications necessary for open data

I believe it's worth clarifying what I mean by 'residue'. The 'expenditure over £500' dataset evidences the end of a chain, while the resources I uncovered while interrogating the database, and the engineers who make said dataset amenable to open data, evidence the chain which led to the dataset's inert form. The steps taken by the developers transfix the council data in a new shape, a shape which determines the questions which can then be asked of it (whether by scrolling through 10,000 lines of CSV or by porting the data into a new database). Two scripts, CR625 and CR626, respectively extract data from the NATURAL database and recombine it for the Open Data dataset.

I was also privy to a record of changes made to the shape of the dataset (the Open Data CSV, as distinct from its originating database). Iterations of the scripts which pulled the data from its parent databases are filled with developers' comments. The comments are like tree rings, illustrating how the database metamorphosed into the realm of open data by signposting the iterative modifications that were required as local government laid bare the data driving it. Through these comments we can see a record of what data is whittled away from the end dataset: in fig. 3 above we can see reference to the aforementioned modifications which omit the petty cash and cheque book payments, and a lengthy modification which omits the Avon Fire and Rescue (AFRS) invoices. We can also see additional modifications which exclude business rates, council tax, payroll and so on. These omissions are accomplished with reference to alphanumeric codes (IDEPT and ISOURCE) which are intelligible to those coders working with the distributed database. The codes are references (indirections) to department codes.
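
The effect of these code-based omissions can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the council's CR625/CR626 logic: the `Record` layout, the exclusion values ("AFRS", "PETTY", "CHQ") and the function name `filter_for_open_data` are hypothetical stand-ins for the real IDEPT and ISOURCE codes, which are not reproduced here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Record:
    idept: str      # department code (hypothetical values below)
    isource: str    # source code (hypothetical values below)
    supplier: str
    amount: float


# Hypothetical exclusion sets standing in for the real IDEPT/ISOURCE codes.
EXCLUDED_IDEPT = {"AFRS"}            # e.g. Avon Fire & Rescue invoices
EXCLUDED_ISOURCE = {"PETTY", "CHQ"}  # e.g. petty cash, school cheque books


def filter_for_open_data(records):
    """Keep only records whose codes appear on neither exclusion list."""
    return [
        r for r in records
        if r.idept not in EXCLUDED_IDEPT and r.isource not in EXCLUDED_ISOURCE
    ]


# Made-up sample rows for illustration only.
records = [
    Record("EDU", "INV", "Acme Ltd", 750.0),
    Record("AFRS", "INV", "Fire Kit Co", 1200.0),
    Record("PARKS", "PETTY", "Corner Shop", 12.5),
]
published = filter_for_open_data(records)
print([r.supplier for r in published])  # ['Acme Ltd']
```

Nothing in the output records that two rows were removed: the filtering leaves no trace in the published data, which is why the developers' comments are so revealing.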

Working with Open Data at this nascent juncture affords insight that will perhaps be obscured once the process is codified. Open Data's infancy has been characterised by the (admirable) urge to get the data out onto the web to kickstart momentum. In the rush to publish, some glitches have slipped through the net: purely machine-readable text sits alongside ostensibly human-readable text (how 'human relatable' it is being another debate entirely).

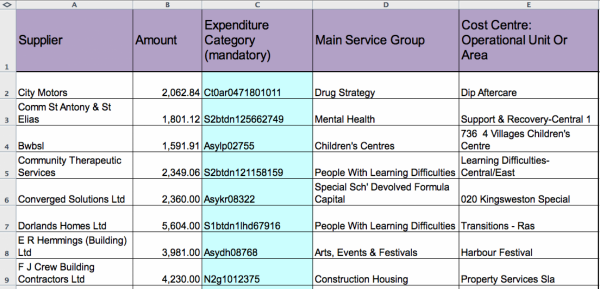

fig 4. open data glitches as informative

In the above diagram we can see that the expenditure category field, which would usually be expected to hold qualifiers like 'COMPUTER COSTS' and 'PAYMENT TO CONTRACTORS', is instead filled with concatenations of characters and numerals resembling a more arcane or convoluted postcode. These aberrant entries provided an avenue into understanding the codes which determine the database's origin. Deciphering these entries is made possible by reference to the Capital & Revenue code. This code is important because it marks the upper threshold of how OPEN open data can be.
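
One way to surface such glitches systematically is a simple heuristic: descriptive categories are words separated by spaces, whereas leaked codes tend to be single tokens mixing letters and digits. The Python sketch below flags the latter; the regular expression, the function name `looks_like_code` and the sample value "R12X4B7" are assumptions for illustration, not actual Capital & Revenue codes.

```python
import re

# Hypothetical heuristic: a single token (no spaces) containing at least
# one letter and at least one digit is treated as a leaked code.
CODE_LIKE = re.compile(r"^(?=.*[A-Za-z])(?=.*\d)[A-Za-z0-9]+$")


def looks_like_code(category: str) -> bool:
    """Return True if an expenditure category resembles a raw code."""
    return bool(CODE_LIKE.match(category.strip()))


categories = ["COMPUTER COSTS", "PAYMENT TO CONTRACTORS", "R12X4B7", "EQUIPMENT"]
aberrant = [c for c in categories if looks_like_code(c)]
print(aberrant)  # ['R12X4B7']
```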

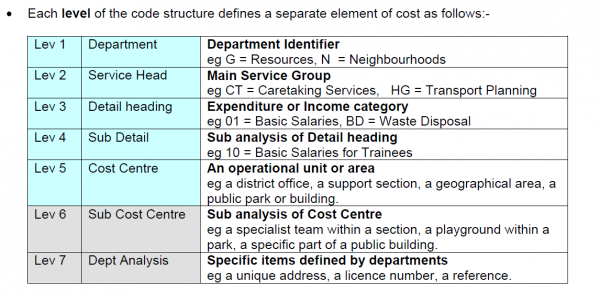

The extent to what the database can relay about the councils expenditure decisions is heavily determined by the granularity the Capital & Revenue code permits; it is an obligatory element to everything which enters the council expenditure database. It defines the valid range of costs (and their subsequent descriptions) as incurred by the council. It’s a concise piece of embedded intelligence, a concept most easily understood via a postcode: the right system can tell an awful lot from this encoding. It is this code which determines the IDEPT codes which were used above in fig. 3 to filter out data not befitting open data expenditure.

fig 5. capital and revenue code

This level of granularity determines the extent to which the populace can hold government to account, should they wish to do so. It tells us what is obligatory for entry and, importantly, what is optional (elaborate). It informs us of what we can expect from the data. For instance, by making levels 6 and 7 (Sub Cost Centre and Department Analysis) optional, it leaves the granularity of the data recorded to the discretion of the CFM System Supervisor (see fig. 2 above). This means that while some departments may indeed see fit to keep finely detailed records of where public money was spent, right down to which playground within a certain postcode received money, because this detail is not obligatory it cannot be compressed into the uniform five-column open data dataset eventually made available to the public. This is but one example of how attentiveness to the processes of making data available is a boon to imparting understanding and comprehension, which are necessary if open data is intended to do more than simply bring the data police, the armchair auditors, into the process of outsourcing checks and balances on civic government.
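
The consequence of optional levels can be modelled with a small Python sketch. Only levels 6 and 7 (Sub Cost Centre and Department Analysis) are named in the text; the total of seven levels, the assumption that levels 1 to 5 are obligatory, their placeholder names, the "-" separator and the sample codes are all hypothetical. What it illustrates is that a uniform export can only rely on the levels every record is guaranteed to carry.

```python
# Hypothetical model of a hierarchical cost code. Levels 1-5 are assumed
# obligatory and given placeholder names; levels 6 and 7 are optional.
LEVELS = [
    ("level_1", True),
    ("level_2", True),
    ("level_3", True),
    ("level_4", True),
    ("level_5", True),
    ("sub_cost_centre", False),      # level 6: optional
    ("department_analysis", False),  # level 7: optional
]


def parse_code(code):
    """Split a '-'-separated code into named levels, enforcing obligatory ones."""
    parts = code.split("-")
    if len(parts) > len(LEVELS):
        raise ValueError(f"too many levels in {code!r}")
    parsed = {}
    for i, (name, obligatory) in enumerate(LEVELS):
        value = parts[i] if i < len(parts) else ""
        if obligatory and not value:
            raise ValueError(f"missing obligatory {name} in {code!r}")
        parsed[name] = value or None  # absent optional levels become None
    return parsed


def common_levels(codes):
    """Return the levels populated in every code: all a uniform export can rely on."""
    parsed = [parse_code(c) for c in codes]
    return [name for name, _ in LEVELS if all(p[name] for p in parsed)]


# Made-up codes: one department records down to a playground, another does not.
codes = ["A1-B2-C3-D4-E5-PLAY01-X9", "A1-B2-C3-D4-F6"]
print(common_levels(codes))
# ['level_1', 'level_2', 'level_3', 'level_4', 'level_5']
```

The playground-level detail in the first code survives parsing, but because the second code omits it, a fixed-width export built from the common levels has no place to put it.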

This code is one way in which the database as a machine acts upon the council's activities. The database becomes the protocol lodged between incomings and outgoings, and in so doing has reinvented the finance machine. Digging beneath the spreadsheet veneer of open data expenditure allows one to see this. The database plugs people into itself at different levels, revealing views of the financial landscape depending on job title. This is an explicit function of the database, which works through IT officers to produce what are known as role-based permissions. And so the permissions structure of the Corporate Finance Management system means that no departmental finance officer can see the expenditure of another department. But the database creates views by other means also: it has migrated beyond its original architecture, proffering quite a different view via open data, that of the public looking back at the council machine laid bare. Open data lets us appreciate that the database remakes its subject, in this case the council.
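
The role-based rule described above can be sketched as a filter over expenditure rows. This Python sketch is a deliberate simplification, not the Corporate Finance Management system's actual permission model: the role names, the `published` flag standing in for what survives the open data filtering, and the sample rows are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class User:
    name: str
    role: str                          # hypothetical roles: "dept_finance_officer", "public"
    department: Optional[str] = None


def visible_rows(user, rows):
    """Return the expenditure rows a user's role lets them see."""
    if user.role == "dept_finance_officer":
        # Officers see their own department only, never another's.
        return [r for r in rows if r["department"] == user.department]
    if user.role == "public":
        # The public sees only rows that survived the open data filtering.
        return [r for r in rows if r["published"]]
    return []  # unrecognised roles see nothing


# Made-up rows for illustration only.
rows = [
    {"department": "Education", "amount": 900.0, "published": True},
    {"department": "Parks", "amount": 40.0, "published": False},
    {"department": "Parks", "amount": 1500.0, "published": True},
]
officer = User("officer", "dept_finance_officer", "Parks")
citizen = User("citizen", "public")
print([r["amount"] for r in visible_rows(officer, rows)])  # [40.0, 1500.0]
print([r["amount"] for r in visible_rows(citizen, rows)])  # [900.0, 1500.0]
```

Each role is handed a different slice of the same rows, which is one concrete sense in which the database "plugs people into itself at different levels".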

I'm sure my interest in the dry detail of an expenditure database's composition confounded and irritated the IT officers I was pestering by proxy (through my liaison with Mark Newman). I am indebted to both Mark and chief IT officer Pete Truan for their patience.

bnr#32 => Tantalum Memorial, Manifesta7, Alumix, Bolzano, Italy.
